Artificial intelligence (AI) is revolutionizing the way we work and do business. But with great opportunities come new responsibilities. The EU AI Act, which entered into force on August 1, 2024, sets the rules for AI in Europe. As a small or medium-sized enterprise (SME), you might be wondering what this means for you. In this article, we highlight the key aspects of the EU AI Act and explain what you should be prepared for.

With the EU AI Act, the European Union aims to take a leading role in global AI regulation. As the world’s first comprehensive AI legislation, it seeks to establish a uniform legal framework for all member states. The core of the act focuses on balancing innovation with the safe and ethical use of AI technologies. The regulation uses a risk-based approach to protect the fundamental rights, safety, and health of EU citizens. At the same time, it aims to build trust in AI systems and foster responsible technological development. Special attention is given to supporting SMEs and startups, making it easier for them to comply with the new regulations while maintaining an innovation-friendly environment.

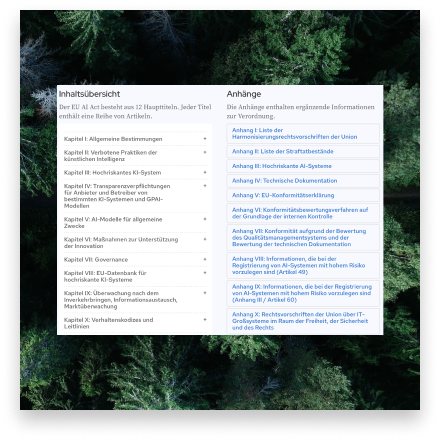

The EU AI Act introduces a risk-based approach, classifying AI systems into different categories. This classification determines the level of regulation and requirements for each system:

Unacceptable Risk

Prohibited AI practices, such as:

High Risk

AI systems that pose a significant risk to health, safety, or fundamental rights, such as:

Limited Risk

AI systems with specific transparency obligations, such as:

Minimal Risk

AI applications that pose minimal risk, such as:

Key Aspects of Risk Categorization

The EU AI Act provides a tiered approach, adjusting obligations and support measures based on the risk level of the AI system:

Minimal Risk:

No specific obligations, but the possibility of developing voluntary codes of conduct.

Limited Risk:

Focus on transparency obligations, e.g., informing users about their interaction with an AI system.

High Risk:

Extensive obligations, including conformity assessments, CE marking, quality management systems, reporting serious incidents, and cooperating with authorities.

The regulation also provides for “AI sandboxes” to support the development and testing of AI systems under real conditions in a controlled environment.
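For an internal AI inventory, the tiered obligations described above can be captured in a small lookup table. The following is only a minimal sketch: the names `RiskTier`, `OBLIGATIONS`, and `obligations_for` are our own, the obligation strings are shorthand for the points listed above, and the two inventory entries are hypothetical examples. Classifying a real system requires a check against the text of the regulation.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories named in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Shorthand for the obligations summarized in the article (not legal wording).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited practice: must not be used"],
    RiskTier.HIGH: [
        "conformity assessment",
        "CE marking",
        "quality management system",
        "reporting of serious incidents",
        "cooperation with authorities",
    ],
    RiskTier.LIMITED: ["transparency: inform users that they interact with an AI system"],
    RiskTier.MINIMAL: [],  # no specific obligations; voluntary codes of conduct possible
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligation checklist for a risk tier."""
    return list(OBLIGATIONS[tier])


# Hypothetical inventory; the tier assignments are illustrative assumptions.
inventory = {
    "website chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

for system, tier in inventory.items():
    print(f"{system} ({tier.value} risk): {obligations_for(tier) or 'no specific obligations'}")
```

Keeping such a table next to the list of AI applications in use makes it easy to document which obligations were considered for each system.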

Special Consideration for SMEs and Startups

The EU AI Act outlines a comprehensive governance and enforcement framework, including:

These structures aim to ensure uniform application of the regulation across the EU. An EU-wide database for standalone high-risk AI systems will enhance transparency and monitoring.

To enforce compliance, significant fines are foreseen: up to €35 million or 7% of worldwide annual turnover, whichever is higher, for the use of prohibited AI practices, with lower ceilings for other violations. For SMEs and startups, the lower of the two amounts applies. Complaint procedures for individuals and organizations are also in place to ensure effective enforcement. The regulation encourages cooperation between national authorities and the EU Commission for consistent implementation across member states.
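To see how the fine ceiling scales with company size, here is a small worked example. It assumes the ceilings from the final text of the regulation for prohibited practices (€35 million or 7% of worldwide annual turnover, whichever is higher, and whichever is lower for SMEs and startups). The function name `fine_ceiling` and its parameters are our own, and the result is only the upper bound, not the fine an authority would actually impose.

```python
def fine_ceiling(
    turnover_eur: int,
    fixed_cap_eur: int = 35_000_000,
    turnover_percent: int = 7,
    is_sme: bool = False,
) -> int:
    """Upper bound of a fine in euros (a sketch, not legal advice).

    Non-SMEs: the higher of the fixed cap and the turnover share.
    SMEs and startups: the lower of the two.
    """
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_share_eur = turnover_eur * turnover_percent // 100
    if is_sme:
        return min(fixed_cap_eur, turnover_share_eur)
    return max(fixed_cap_eur, turnover_share_eur)


# Large company with EUR 1 billion turnover: 7% exceeds the fixed cap.
print(fine_ceiling(1_000_000_000))             # 70000000
# SME with EUR 10 million turnover: the lower amount (7%) applies.
print(fine_ceiling(10_000_000, is_sme=True))   # 700000
```

The SME rule is the reason the ceiling for a small company is tied to its turnover rather than to the headline figure of €35 million.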

The implementation of the EU AI Act will be gradual to give all stakeholders sufficient time to adapt:

For existing AI systems, there is a transition period until August 2026, giving companies time to adjust their systems to the new requirements.

To keep the regulation current and effective, it will be reviewed every 24 months and adjusted for technological developments and new challenges.

Reactions have been generally positive. While the initial draft faced criticism for excessive bureaucracy and competitive disadvantages, the final version simplified some aspects to avoid stifling innovation. Many believe that the AI Act, like the GDPR, will serve as a blueprint for other countries. Contrary to expectations, large companies are in favor of a certain level of regulation, as it provides clear guidelines and planning security for further development.

Find out in 10 minutes how the AI Act will affect you by answering a series of simple questions.

Search the full text of the AI Act online. Search within the law for parts that are relevant to you.

The EU AI Act presents a balanced legal framework that promotes innovation while protecting fundamental values. For most companies not working with high-risk AI, the regulation remains manageable. The risk-based approach, support for SMEs and startups, and measures like AI sandboxes demonstrate the EU’s commitment to fostering an innovation-friendly environment.

Ultimately, the regulation aims to build trust in AI and position the EU as a leader in ethical, safe AI development—without burdening the majority of AI developers with excessive bureaucracy.

“Please don’t abandon AI innovations out of unfounded concern! Simply honestly evaluate and document the AI applications used – then nothing will stand in the way of a successful deployment!”

Do you have further questions or a specific AI project for which you need support? We at eisbach partners already work a lot with generative AI, both in our own ventures and in customer projects. Just get in touch with us!

Eisbach Partners specializes in helping companies integrate artificial intelligence technologies while ensuring compliance with the latest regulations. Our team of experts is dedicated to supporting startups and SMEs in navigating the EU AI Act. We offer tailored solutions that balance innovation with legal requirements, enabling you to stay competitive while adhering to ethical and safety standards.